"Don't Trust Everything You See On the Internet" (is not new advice)

The rise of AI media won't make identifying the truth any more difficult than it already is.

“A culture's ability to understand the world and itself is critical to its survival. But today we are led into the arena of public debate by seers whose main gift is their ability to compel people to continue to watch them.” ― George Saunders, The Braindead Megaphone

Lately, you’ve probably been hearing folks say things like: “As AI gets better, we won’t be able to tell what’s real!” There is a fear that as generative AI technology improves, our feeds will clog up with misleading, low-effort, shallow and manipulative content that’s hard to distinguish from the authentic stuff. That in order to find something real we’ll have to wade through mountains of mass-produced clickbait, ragebait, engagement bait, ads, and millions of other posts attempting to do something other than educate us or connect our souls with that of another human.

People are deeply concerned that this might happen.

If you are one of these people, dear reader, allow me to allay your fears! The world of social media you are so afraid of (where images and video can’t be trusted to represent reality, where psychological manipulation is paramount and propaganda is the rule, not the exception) is not approaching fast on the horizon!

It’s here! Y’all living in it!

In this essay I hope to make clear these three points:

1. Social Media is not “the Internet”.

2. There has never been a strong reason to trust anything posted to social media without verifying it.

3. Advances in generative AI will not change points 1 and 2.

Let’s begin!

A Bullshit History Lesson

The following statistic is completely anecdotal (which means you can’t tell me I’m wrong) but there was a brief period of time, from the birth of “Web 2.0” until about 2013, when a critical mass of the content found in the “main” feeds of the various social media platforms came with no overt reasons to doubt the earnestness of the person posting it. As evidence that this time indeed existed, here is Buster Baxter in 2005 completely shook to his core by the idea that someone might fabricate an “Am I the Asshole” ragebait post on Reddit to stealth advertise a new dating app:

When the World Wide Web was new, the grown-ups were extremely skeptical of it. This is because they understood how easy it would be for someone to Go On It And Tell Lies. It took some years before it was able to gain society’s collective trust. People used to say “Where did you read that? Wikipedia?” as a fun way of saying “You are an ignorant fool to believe such nonsense” but over time we learned that just because someone could tell lies, most people didn’t, and when the studies showed that Wikipedia was actually pretty trustworthy after all, the techno-optimists among us celebrated. It seemed that left to our own devices, humanity is mostly good! Rock n’ roll, baby!

This era of (false) trust in platforms led to complacency. While the Web had liars lying on it, the amount, fashion and tellers of the lies more or less mirrored what one might expect to encounter in the real world. Everyone surfin’ the Web was already well aware that politicians spun facts and advertisements glorified the products on display. In other words: The Web had an amount and manner of lying that a well-adjusted, reasonably cautious person was already equipped to handle.

Internet platforms and their users were equipped to handle occasional liars. What they were not equipped to handle was constant streams of bullshit.

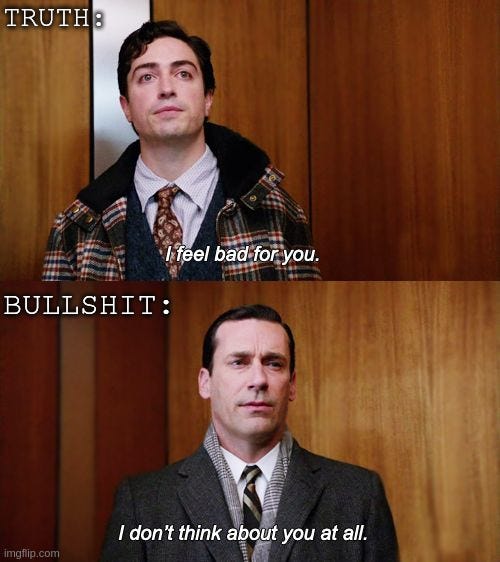

Bullshit is not “lying”. In order to be a liar, one must know the truth. Liars have a relationship with the truth. But bullshit requires no such relationship. A bullshitter, as described by Harry G. Frankfurt in his 2005 book On Bullshit:

…is neither on the side of the true nor on the side of the false. His eye is not on the facts at all. He does not reject the authority of the truth, as the liar does, and oppose himself to it. He pays no attention to it at all. By virtue of this, bullshit is a greater enemy of the truth than lies are.

Here it is again in meme form for the kids:

For a while, the for-profit social media platforms that I’m always complaining about —while not without problems— were actually semi-decent places to connect with like-minded strangers and have constructive conversations. The algorithms, inasmuch as they existed back then, rewarded “engagement”. Back then, “engagement” almost always implied some form of two humans interacting authentically. Remember when the major complaint about Instagram was how so many people posted pictures of their food? When Instagram’s biggest annoyance was that people were being too authentic? Posting a photo of one’s dinner plate to Instagram these days? It feels charming in its naivete!

When a place is full of people behaving authentically it becomes open season for the bullshitter. Remember, the goal of the bullshitter is not to spread lies, but to overwhelm the means of communication. The goals of the bullshitter are unrelated to the thing they are bullshitting about. Their words can be a glue trap that authentic people get stuck on.

This means that a bullshitter, unlike a liar, cannot be defeated or shooed away with Facts And Logic. To engage with a bullshitter is to create more bullshit. And because the social media platforms’ profitability was/is tied to engagement, they were/are unable to do anything about bullshitters lest they risk the waking nightmare that is “making slightly less money”.[1]

The only reason corporate social media “worked” for the time it did was that platforms relied on human-made content, and that’s just not something they rely on as much anymore. Because most people are mostly authentic most of the time, most of the content was mostly authentic too. That gave the public an impression that the platforms were places of authenticity, when in reality any authenticity was only a byproduct. And as platforms shifted from being “socially-sorted media” to “algorithmically-sorted media”, the majority of authentic content (that again, was never the real priority) fell by the wayside.

A few platforms held on longer than most. Wikipedia has managed to stay mostly-good, and that’s really just because its nonprofit status presents different financial incentives that don’t require “engagement” (meaning its value is maintained no matter how quickly or slowly users add data). Also on Reddit, human volunteer moderators were able to recognize and remove bullshit on a scale automated systems could not manage. This is why for a while you’ve been able to add “reddit” to your Google search in order to find more authentic results. Even Google recently acknowledged the value of this and is giving Reddit a bump in its results:

Reddit plays a unique role on the open internet as a large platform with an incredible breadth of authentic, human conversations and experiences […] Over the years, we’ve seen that people increasingly use Google to search for helpful content on Reddit to find product recommendations, travel advice and much more.

Of course, Reddit (the company) also hosts entire “communities” dedicated to spreading bullshit. Combine that with the fact that Reddit itself is inserting more algorithmically-chosen content into feeds, and an increasing amount of that content is AI-generated spam, and you get the dying mall it is today.

Automated Bullshit

“Truth” cannot be automated by machines. This is because what people consider to be true requires a person to consider it. Do not take this in a woo-woo “reality is what you make of it” sort of way. Instead, recognize that “truth” is not just another word for “the facts”, but is an interpretation of them made for humans. Because the act of interpreting requires an interpreter —a person— to do it, “the truth”, unlike “a fact”, cannot exist unless it is being told.

Let’s use journalism as an example to explore this a bit more. When we say we “trust” a journalist, newspaper or other publication to report the news, we are not saying we expect them to only deliver perfect facts with unwavering consistency; we are saying we expect them to be honest with us about their perspective. Even some quasi-dishonest messengers, such as an actor acting or a billboard advertising, can still communicate useful information as long as we know who it’s coming from. Furthermore, a human being honestly describing reality as seen from their perspective will always be telling the truth, no matter how misguided their reasoning or how completely incorrect they may be about the facts.

The news media frequently struggles with this. Is someone who makes no effort to know the truth a “liar”? Technically no. But that doesn’t make such a person honest either. In technical terms, such a person is a bullshitter.

And while we’re technically speaking, generative AI is a bullshitter too.[2] No matter how advanced the technology becomes, no matter how indistinguishable its outputs get from what a human might say, generative AI can never honestly tell you why it said what it said. It can never honestly say “I don’t know, but here’s a guess” or answer a question with “based upon my understanding”. It cannot share its perspective of reality because it has no perspective.

The biggest problem with using large language model AIs as fact-giving machines is that they sound convincing even when incorrect. When I first learned about GPT my thought was “Golly, if they can figure out how to make AI not hallucinate, this is world-changing!” But this “problem” of “hallucinating” is not a bug, it’s central to the process of how the technology works. What the companies call “hallucinating” is just a PR-friendly way of describing “bullshitting”. Sometimes it outputs facts, sometimes it doesn’t, but “truth” is unrelated to the purpose of its “speech”. The bullshitting “problem” is fundamental to the way LLMs work. They simply cannot fact-check because to do so means analyzing one’s own thought process, something they don’t have (at least not in the way a person does). In order to fact-check, there needs to be a checker. A checker cannot exist without a perspective to check from.

Adam Savage of MythBusters fame gave one of the best summations of this I’ve yet seen when asked how he felt about the use of generative AI in filmmaking:

The only reason I’m interested in looking at something that got made, is because that thing that got made was made with a point of view. The thing itself is not as interesting as the mind and heart behind the thing. And I’ve yet to smell in AI what smells like a point of view.

Because generative AI cannot have a relationship with the truth, because it has no perspective of its own, it cannot tell the truth. It can say facts, but it cannot tell you why it believes something to be a fact. It does not understand what a fact is. This is why generative AI definitionally cannot be honest or lie to us. It can only produce bullshit.

In this post I attempted to illustrate in easy-to-understand terms how generative AI software like GPT and Midjourney is not programmed to communicate, but to “bypass our bullshit detectors”. Check it out! It may clear some things up.

So how can we know what’s real?

The way we always have! Trust your senses and trust places that encourage people to be authentic. Listen to them. Ask questions. Bullshit is by definition hard to detect, so don’t get your sense of reality from places that tolerate it.

Do you think (real) news organizations just pluck a video from Twitter and proclaim its contents to be factual? No, they send out cameras of their own, investigate the sources and seek third party confirmation because trust is more integral to their business model than engagement. Even Wikipedia (not a traditional news publisher) has explicit standards for what they allow to be posted. And it works pretty well. Though it remains to be seen if a few well-funded bullshitters using generative AI might be able to post their BS faster than Wikipedia’s human moderators can detect and remove it.

But this isn’t just about the news. Netflix recently aired a documentary that used AI generated images to “enhance the narrative”. Is it lying? Not exactly. But it is some bullshit. Does it mean we should dismiss everything Netflix publishes? Probably not yet. And while this incident certainly has affected my trust in them, I, being a person who basically never blindly clicks “play” on whatever is suggested, was not expecting rigorous levels of truth-telling from a low-budget Netflix documentary to begin with.

I also think we are lucky to be able to experience AI software in an “imperfect” state, because it allows us to more easily notice when it’s being employed. In the case of the Netflix documentary, we are easily able to identify the hallucination bullshit because of, as usual, the hands:

The companies that make this stuff claim that one day it will be indistinguishable from human-made content. I still question that claim, but if it turns out to be true, my concern is not that humans won’t be able to smell bullshit, but that a majority of people won’t even try.

While the biggest purveyors of bullshit by volume (social media companies) will probably collapse as a result of its accelerating automation, something I’ve learned about the Internet this past decade is that a distressing number of real people will willingly consume the bullshit if it conforms to their preferred worldviews. Human-powered systems like democracy simply can’t function as intended if a critical mass of participants are unwilling to accept the same reality. But I think (hope) that most people, most of the time, want to know the truth, even if the truth is unkind to them, or forces them to reevaluate their opinions.

Which means that the problem we face is not that humans don’t possess the capability or desire to tell what’s real; it’s that people don’t seem to know how to do it. In 2020 the percentage of U.S. adults who said they regularly get news from TikTok was 3%. By 2023 it quadrupled:

Their news. Their interpretation of reality and the world around them. They get it from TikTok. This is, IMO, not a great trend. And frankly, I don’t know how free societies can correct course, besides teaching their citizenry critical thinking skills[3] and regular folks regularly pointing out that TikTok’s business model (along with all the other companies) just does not rely on a relationship with the truth. Algorithmic media does not lie, but it is not honest, either. It should, like “reality” TV, be viewed with an understanding that it is entertainment. But not everyone seems to view it that way. Yet.

One Last Thing So We Can End Optimistically

I know this is a long post, but I have one last interesting thing on the subject that gives me a bit of hope: Facebook recently did an accidental experiment wherein it tweaked its algorithm to de-prioritize news and politics. This had two interesting effects: One, it made Facebook into a weird AI-garbage filled space (as I predicted would happen), which isn’t great but also oh well. But the second thing it did was completely destroy the traffic (down 90% in some cases) to a lot of political websites that exploited Facebook’s algorithm’s penchant for “engagement” to boost their (let’s be honest) ragebait-style propaganda. Now, your first thought might be that Facebook is “censoring” or “punishing” these websites, but remember, all links to news and politics were de-prioritized, and yet the “boring”, non-sensational news outlets were minimally affected. This implies that most of the traffic to the propagandist sites was coming via Facebook, not from people directly seeking it out. Without Facebook forcing button-pushing ragebait right in front of users’ faces, 90% of them didn’t care to find it on their own.

This gives me hope that a lot of the divisive vitriol we see shared online is just, well, bullshit. And that most of us appear able to recognize it as such, even if it’s sometimes hard to ignore. Facebook’s “experiment” shows that most people, given a choice, don’t want to seek out that kind of material; we’re just really bad at ignoring it when it’s put in front of us. It appears that the business model of “make up a bunch of triggering bullshit because it drives traffic to your website where you can sell ads” doesn’t work so well without The Algorithms™ to force that content upon users.

As AI advances, the volume of bullshit will likely continue to grow. But if you’re worried about being able, or unable, to know what’s real, stop worrying. And not because we’ve already lost, but because you already know how to win. The important thing to focus our attention on is not who’s lying, but who’s being honest. Identifying those people is a skill; it takes patience, effort, and critical thinking. It’s also nothing new.

[1] Literal chills. My god, the horror. It’s painful to even imagine.

[2] Cory Doctorow (who coined the term “enshittification”) uses the term “botshit” to describe Generative-AI spam clogging up the ‘tubes.

[3] Shout out to the wonderful people at the News Literacy Project.

Thank you for introducing me to George Saunders's quote, crazy how accurate it is to this day. Which, I suppose, was the point.

This was great. Always good to read your stuff. Love how you broke down bullshit. One point of departure for me though. I can see why people lately trust TikTok as a media source. They see a genocide unfolding there that they do not see on the news. Or to quote a recent tweet by ARX-Han: "In virtually every other context, the smartphone is an engine of state surveillance and control, and yet in the case of an ongoing genocide, it suddenly inverts in function and the consent manufacturing apparatus spins out of control. Truly a dual-use technology." But other than that, I wish I saw more posts from you. Great to read you again.